PyTorch on Twitter: "FP16 is only supported in CUDA, BF16 has support on newer CPUs and TPUs. Calling .half() on your network and tensors explicitly casts them to FP16, but not all…"

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else) - The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation

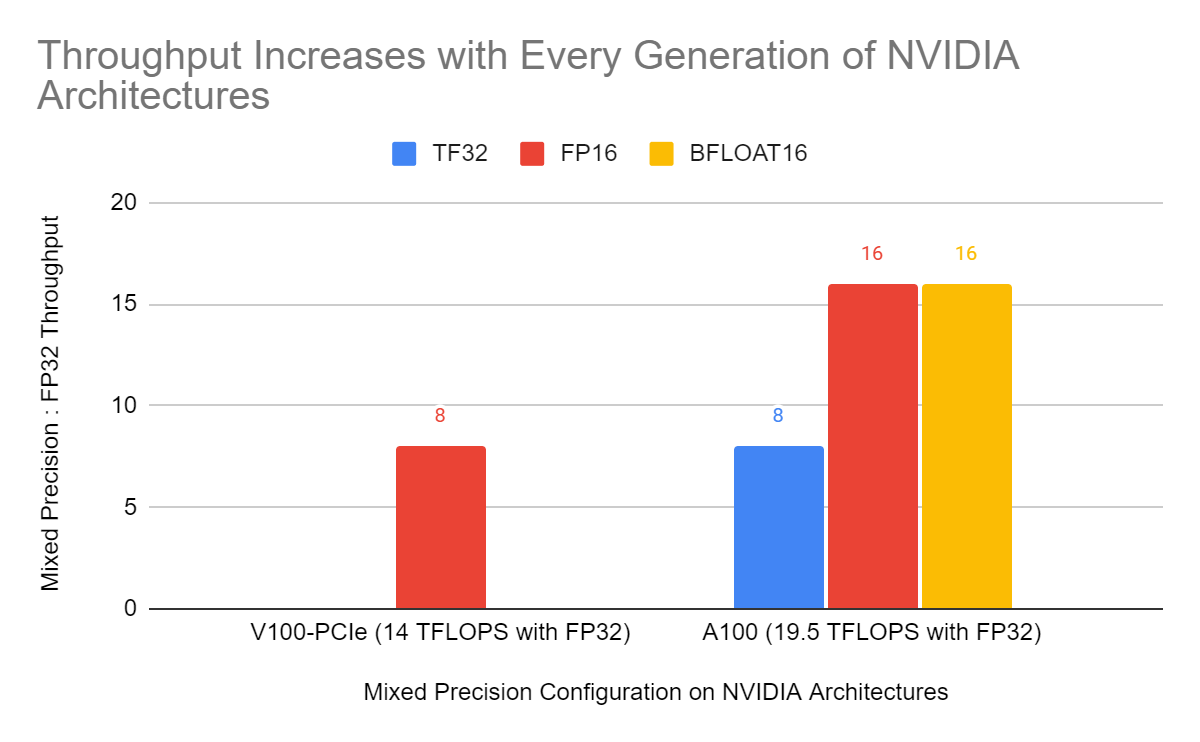

A Shallow Dive Into Tensor Cores - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

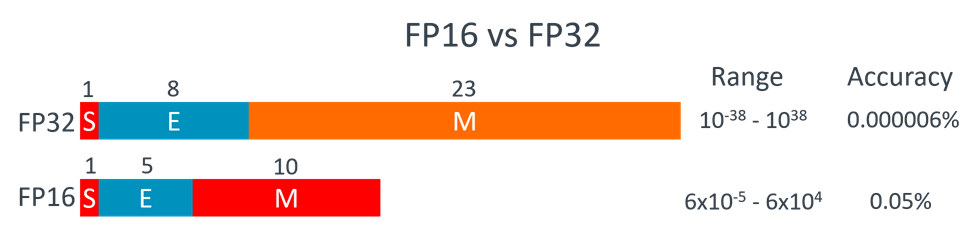

The differences between running simulation at FP32 and FP16 precision.... | Download Scientific Diagram

![[RFC][Relay] FP32 -> FP16 Model Support - pre-RFC - Apache TVM Discuss](https://discuss.tvm.apache.org/uploads/default/original/2X/4/450bd810eb5b388a3dc4864b1bdd5f78cd01d2dc.png)